Behind the scenes of the systematic review

Posted:

9 May 2022, 1:53 p.m.

Author:

Harry Bregazzi

Postdoctoral Research Fellow, University of Oxford

Topics:

Impact bonds, Outcomes-based approaches

Policy areas:

Education

Types:

Global Systematic Review

In our ongoing systematic review of outcomes-based contracts, the Government Outcomes Lab and Ecorys aim to produce the deepest and broadest investigation to date of the available evidence. Once the relevant studies have been collated and organised, they will form a rich body of evidence from which we can synthesise key findings for practitioner and policy audiences, as well as identify new opportunities for academic research on the topic. Getting to that point, however, is not a quick or straightforward process!

To mark the publication of the first output informed by the review – a World Bank report on education impact bonds in low- and middle-income countries – this blog shines a light on 18 months of ‘behind the scenes’ effort that has gone into producing it. We are delighted that the project is beginning to generate policy-relevant insights; this blog aims to demonstrate the rigour and reliability of the work that underpins them.

Searching for and screening the evidence

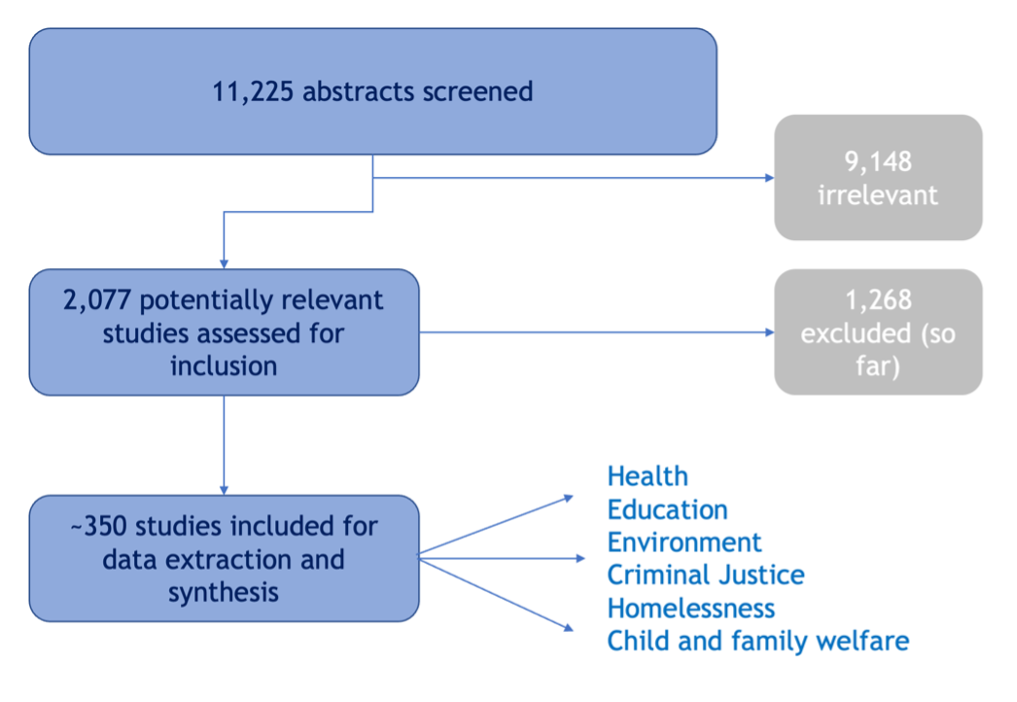

Unlike more conventional literature reviews, a systematic review aims to be exhaustive – that is, to identify every existing publication on a specific topic from within a defined time period. For our review, this means identifying all empirical studies of outcomes-based interventions published between 1990 and 2020. In pursuit of that goal, the systematic review began with a thorough search strategy. We developed a list of key terms in consultation with a Policy Advisory Group, and then used those terms to search twelve bibliographic databases. Any publication within these databases that could possibly be considered relevant to outcomes-based service delivery was returned to us for closer inspection. In addition, we ran 192 Google searches to ensure that we captured so-called ‘grey literature’ – i.e., research and evaluations produced outside of traditional academic publications. Much of the practitioner-focused material that we wanted to include in the review is among the grey literature. We also issued a call for evidence, soliciting submissions of further research that might not have been picked up in the database or Google searches. Ultimately, the process returned 11,225 studies.

Casting such a wide net in the search strategy inevitably captures many studies that are not in fact relevant to the review’s purpose. This necessitates screening – the process of removing any studies that obviously do not relate to the topic that we are studying. A team of 12 reviewers read the titles and abstracts of every study, either excluding them as irrelevant, or passing them through to the next stage of the review process. Each study was screened twice, each time by a different member of the review team, meaning a total of roughly 22,500 individual assessments and decisions.

At the end of months of screening, we had narrowed down our selection to 2,077 potentially relevant studies. But the assessment process did not end there. While we could be confident of their potential relevance to our review, we still did not know if the remaining studies met our more detailed inclusion criteria, which contain specific requirements regarding empirical content, and whether or not sufficient contract detail is provided. To assess the studies against these criteria, the team set about surveying the full texts, and on that basis made a final decision on whether to include or exclude each study. As with the abstract screening, each study was double-reviewed. If two reviewers disagreed in their assessment of a study, it was sent to a third, senior, member of the team, who examined the text and cast the deciding vote.
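The double-review rule described above can be sketched in a few lines of Python. This is only an illustration of the logic, not the tooling the review team actually used: the function name `resolve_decision`, the study IDs and the votes are all hypothetical.

```python
from typing import Optional


def resolve_decision(first: bool, second: bool, senior: Optional[bool] = None) -> bool:
    """Return the final include (True) / exclude (False) decision for one study.

    If the two reviewers agree, their shared decision stands. If they
    disagree, a third, senior reviewer casts the deciding vote.
    """
    if first == second:
        return first
    if senior is None:
        raise ValueError("reviewers disagree; a senior reviewer's vote is needed")
    return senior


# Hypothetical study IDs and votes, for illustration only:
# (first reviewer, second reviewer, senior reviewer if consulted)
votes = {
    "study-A": (True, True, None),    # both include
    "study-B": (False, False, None),  # both exclude
    "study-C": (True, False, True),   # disagreement, senior includes
    "study-D": (False, True, False),  # disagreement, senior excludes
}

included = [sid for sid, (a, b, s) in votes.items() if resolve_decision(a, b, s)]
print(included)  # ['study-A', 'study-C']
```

Raising an error when a disagreement has no senior vote is a design choice in this sketch: it makes sure no study slips through with an unresolved decision.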

At the time of writing, this second, more detailed, assessment process is just about completed. After a year and a half of systematic screening and reviewing, we have a record of over 300 empirical studies that we can be confident represent the forefront of collective knowledge on outcomes-based contracting.

At times, the process of screening and assessing thousands of studies felt never-ending. Which, in fact, it is! New studies of outcomes-based contracting are regularly being published. We aim to keep our review database current by periodically re-running searches and incorporating newly published evidence. In an exciting development to this end, the GO Lab is working with partners at The Alan Turing Institute to harness the power of machine learning, which we hope will automate aspects of the assessment process just outlined, and so greatly assist with keeping the review up to date.

Data extraction and synthesis of findings

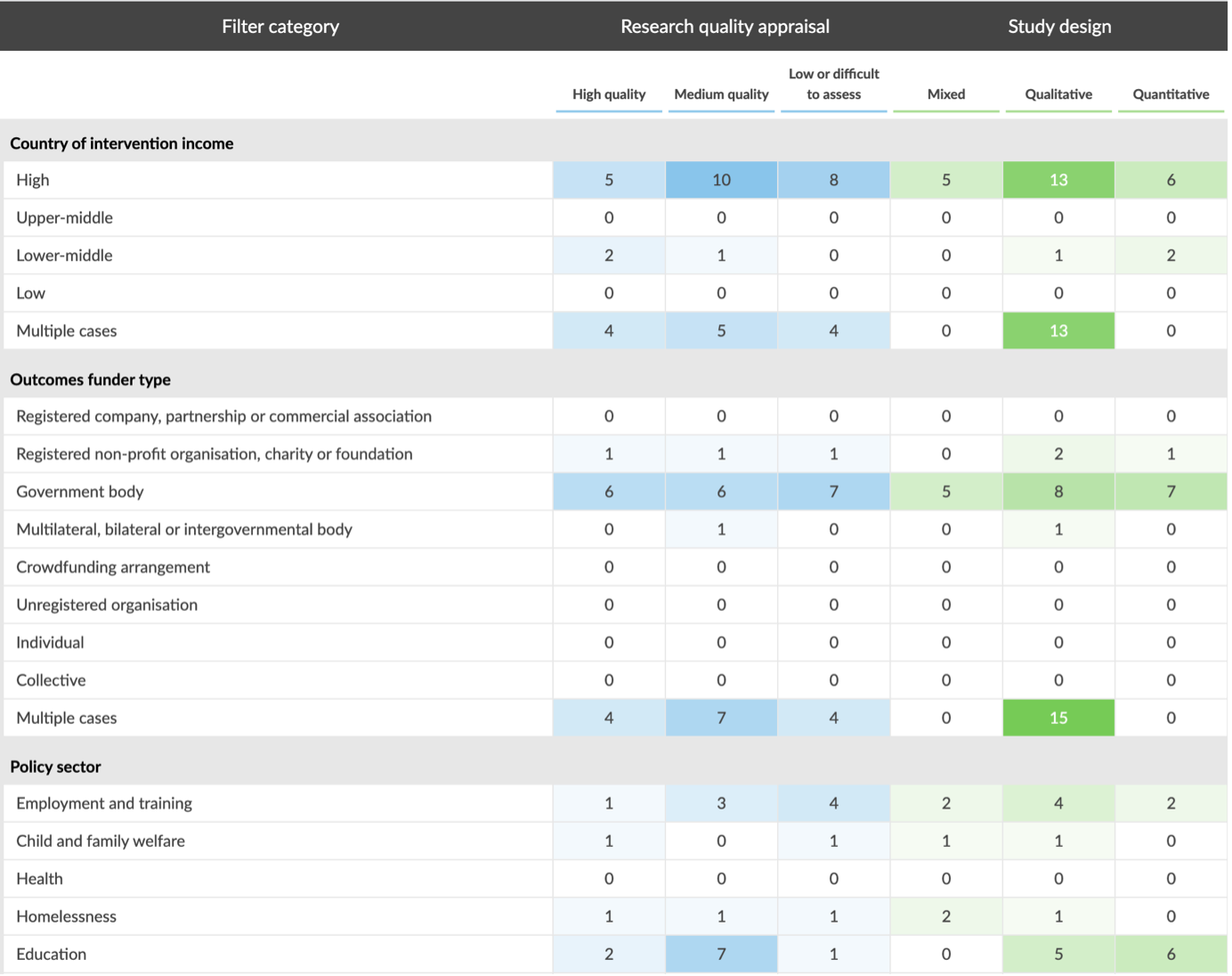

Once the collection of included studies is established, it becomes a resource that can inform numerous policy and research questions regarding outcomes-based contracting of public services. This is the point at which all of the effort pays off – we can begin extracting key data from the studies and synthesising their findings. An early insight to be had is a greater understanding of the evidence landscape. By extracting a uniform set of variables from every study – policy area, country of intervention, funders and service providers, etc. – a comprehensive picture of the available evidence is built. It can then be organised for easier navigation, including in interactive tools like our evidence ‘heatmap’ (under development).
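To give a rough sense of how a uniform set of extracted variables can feed an evidence heatmap, here is a minimal Python sketch. The records, field names (`policy_area`, `country`) and the helper `heatmap_counts` are assumptions made for illustration; they do not reflect the review's actual data or the design of the GO Lab's tool.

```python
from collections import Counter

# Hypothetical extracted records: one dictionary per included study,
# each with the same set of variables.
records = [
    {"policy_area": "Education", "country": "Country A"},
    {"policy_area": "Education", "country": "Country B"},
    {"policy_area": "Health", "country": "Country A"},
    {"policy_area": "Education", "country": "Country A"},
]


def heatmap_counts(records, row_key, col_key):
    """Count studies for every (row, column) combination of two variables."""
    return Counter((r[row_key], r[col_key]) for r in records)


counts = heatmap_counts(records, "policy_area", "country")

# Lay the counts out as a grid: one row per policy area, one column per country.
rows = sorted({row for row, _ in counts})
cols = sorted({col for _, col in counts})
for row in rows:
    # A Counter returns 0 for combinations with no studies.
    print(row, [counts[(row, col)] for col in cols])
```

Each cell in the grid is simply the number of studies sharing that pair of values, which is why extracting the same variables from every study matters: gaps in the evidence show up as zeros.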

In addition to broad overviews, the studies can be utilised for more detailed enquiries into specific themes. Our first output, for example, focuses on the studies relating to education. Similarly, we have just completed a review of the evidence on outcomes-based environmental projects, considering their potential role in climate change mitigation and adaptation. This second report will be published within a few months. The exhaustive nature of the systematic method means we can be confident that the analyses are based on all of the available evidence.

The systematic review therefore has great potential for generating new insights, policy recommendations, and avenues for further research into outcomes-based contracting. If you are a policy lead who would like bespoke analysis of the leading studies in outcomes-based contracting in your field, or a researcher who would like to collaborate on analysis, please get in touch with the GO Lab’s Research Director, Dr Eleanor Carter.

Further information about the review process and method, including details of the inclusion criteria, can be found in our open-access protocol paper. Any general comments or questions about the systematic review can be sent to Dr Harry Bregazzi.